In the world of securities markets, attitudes to AI vary widely. For some, it’s a game changer that is transforming the industry; for others, it represents an existential risk that threatens the survival of humanity.

As Chair of the Risk Standing Committee of the European Securities and Markets Authority (ESMA), I have been closely monitoring AI and its capabilities, while also examining how widely AI-based tools have been adopted in the financial sector.

The reality is that while AI can transform the securities market by improving efficiency, increasing accuracy and reducing costs, it is still in its infancy. We are only starting to scratch the surface of how AI can enhance our capacity to innovate and interact with the market. That’s why building trust will be essential to its broad adoption.

One of my key priorities has been to ensure that CySEC (the Cyprus Securities and Exchange Commission) keeps pace with developments in AI, so that AI is deployed in a safe, trustworthy and responsible manner and we can move swiftly to protect investors from new and emerging risks. Regulators cannot afford to bury their heads in the sand.

In a recent report, Artificial Intelligence in EU Securities Markets, ESMA noted that an increasing number of asset managers now use AI in developing their investment strategies, risk management and compliance. Yet only a few have developed a fully AI-based investment process, and fewer still advertise their use of AI or machine learning.

While there are clearly numerous opportunities to apply AI in the securities industry, ESMA’s analysis shows that the actual level of implementation varies widely, both by sector and by entity.

Lack of Evidence on AI Use Is a Challenge for Regulators

Evidence on the use of AI in European financial markets is still scarce, and this presents a challenge for regulators. We can see that AI has the capacity to strengthen our supervision, and we want to support the digital transformation. To do so, however, we need to step up monitoring and gain access to much better information, so that we fully understand how AI is actually being applied and can identify the potential risks.

This will enable us to design robust governance and security protocols that protect consumers and promote financial stability.

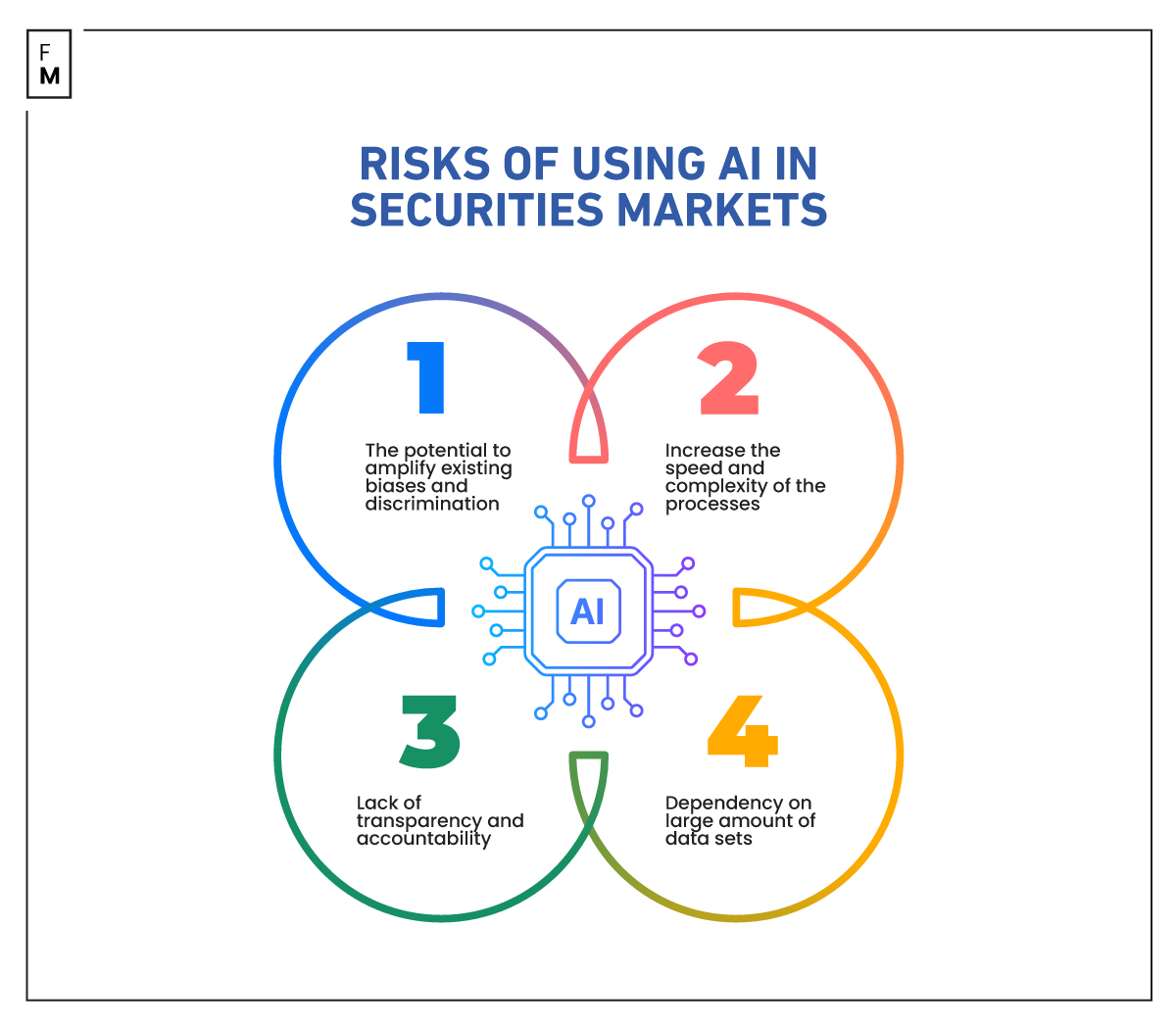

ESMA has highlighted several risks and challenges that must be addressed. One of these is the potential for AI to amplify existing biases and discrimination, a concern that cuts across all sectors and one that the EU’s flagship AI Act aims to address.

In the financial sector, this has implications for market integrity and investor protection. AI systems are only as good as the data they are trained on, so for firms that want to build relationships with their customers, human oversight will be crucial.

Another risk is that, because AI will significantly increase the speed and complexity of the processes used by the firms we supervise, it will be more difficult for regulators to monitor those processes in real time. Access to large amounts of data will be crucial to the successful use of AI, but as these systems become more complex, there is a risk that they will become less transparent.

Anecdotal evidence collected by ESMA, echoed by some of the market participants I speak to, suggests that investors tend to associate AI with a lack of transparency and accountability, which is another barrier to the uptake of these tools.

Banking Is Leading the AI Movement

Banking has been at the forefront of promoting AI, and I believe this can help us understand the trends likely to shape the securities industry. Banks, as we know, are increasingly using cloud-based solutions to store and process data and to protect their systems from cyber threats.

Another area likely to become more widespread is Natural Language Processing (NLP), in which large volumes of unstructured text are analyzed to identify specific information, for example to extract data from financial reports. It is estimated that by 2025 almost 30% of NLP applications will be in banking, financial services and insurance.

CySEC already employs similar tools to monitor the marketing and social media activity of regulated entities for aggressive marketing behaviour. These tools can detect all related mentions from any source worldwide, across 187 languages.

Furthermore, we are developing a data-driven supervision framework that will collect large volumes of data (EMIR, SFTR and MiFIR data) and use AI tools to analyze that information and guide our supervisory practices. Likewise, ESMA has begun to use AI in its work to monitor market abuse.

"In particular, ESMA [European Securities and Markets Authority] intends to … Ensure efficient use of modern analytical tools, for the ESMA direct supervision mandates and by NCAs, in particular by carrying out projects using novel technologies, such as artificial intelligence…" pic.twitter.com/2a1RuqPI3I

— Joshua Rosenberg (@_jrosenberg) June 15, 2023

One of the ways CySEC is gaining a better understanding of these technologies is by creating a Regulatory Sandbox. This will allow firms to test new innovations, services and products in a controlled environment, while CySEC assesses the potential risks and how best to protect future investors.

To prepare for the transition and maintain Europe’s competitiveness, the industry will need to invest in infrastructure, resources and talent, bringing in the expertise needed to build and operate these technologies.

Additionally, regulators need to sharpen their focus on ensuring compliance and protecting investors. Regulators, companies and investors alike also need more evidence to help reduce the wariness that many market participants feel, and so secure the future growth of the industry.

This is why CySEC is among the regulators that welcome the prospect of a clear and evidence-based framework for the effective and trustworthy use of AI.